A universal jailbreak that bypasses AI chatbot safety features has been uncovered, and it is raising concerns.

Related Posts

Gamers, stock up. Top-tier gaming accessories are up to 35% off at CORSAIR

WeTransfer’s confusing terms of service clause prompts reassurances that it doesn’t use uploaded files to train AI models.

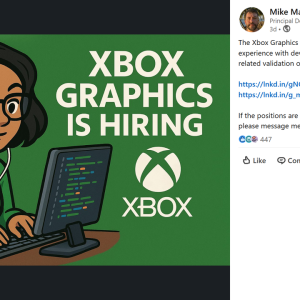

A Microsoft ad on LinkedIn is getting roasted for using a terrible AI-generated graphic to promote a graphic designer role…

Best Buy has launched a huge back-to-school laptop sale, so I’ve looked through it and hand-picked the 12 top laptop…

I tried Perplexity AI’s Comet browser that can search the web for you, and now I can’t go back to…

From Shakespeare to parenting: Google’s NotebookLM debuts themed ‘Featured Notebooks’ with The Economist & The Atlantic.

Can’t be bothered to clean the inside of your PC? Filters modelled on nasal hairs might keep your machine dust-free.

The Asus ROG Flow Z13 is an excellent 2-in-1 gaming laptop/tablet, but there are a few flaws that prevent it…

Looking for NYT Strands answers and hints? Here’s all you need to know to solve today’s game, including the spangram.